Introduction to Hadoop Training:

Hadoop stores huge data sets and processes that data. Hadoop is free, open source, and a Java-based programming framework. It is very useful for storing huge quantities of data and is designed mainly for batch processing: a Hadoop job takes a large data set as a single input, all at once, processes it, and writes a large output. Hadoop includes a distributed file system (HDFS). It can also be run on your own system.

Hadoop Online Training Outline Details:

IdesTrainings offers the best Hadoop training, delivered by expert trainers.

- Mode of Training: We provide Hadoop online training, Hadoop corporate training, and Hadoop virtual web training.

- Duration Of Program: 30 Hours (Can Be Customized As Per Requirement).

- Materials: Yes, we provide materials for Hadoop Online Training.

- Course Fee: Please register on the website so that one of our agents can assist you.

- Trainer Experience: 10+ years.

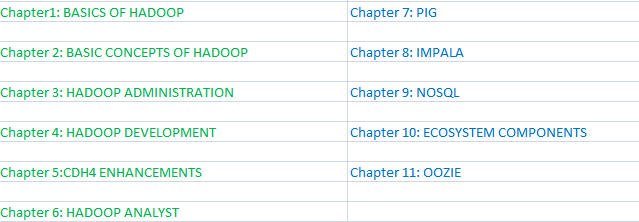

HADOOP TRAINING COURSE CONTENT:

Overview of Hadoop Training:

- Storing huge amounts of data has become difficult: data volumes keep increasing, but traditional storage does not offer that much capacity and has other disadvantages as well. Big data platforms were introduced for this reason, because they can store data at petabyte scale. We also provide classroom training in Hadoop, with the client's permission, in Noida, Bangalore, Gurgaon, Hyderabad, Mumbai, Delhi and Pune.

- The main reason for using Hadoop is scale. The internet, for example, requires storing data from every website, and the total reaches petabytes. Storing that much data in a conventional database takes too much space, so Hadoop is used for storing large amounts of data. We also give Hadoop training material, prepared by professionals.

- Processing a huge amount of data in a short time is not possible with traditional systems, so Hadoop was introduced.

What is Hadoop:

- Hadoop is a framework of tools, not a single piece of software that you download to your computer. The objective of the framework is to support running applications on big data.

- Hadoop is an open-source set of tools distributed under the Apache license and maintained by Apache, hence the name Apache Hadoop.

- Big data creates challenges, and Hadoop addresses them. The challenges arise at three levels: a lot of data arrives at high speed, a big volume of data has been gathered and keeps growing, and the data comes in all sorts of varieties and is not organised. IdesTrainings offers Hadoop training at reasonable

Why Hadoop Training is important:

- As the volume of generated data grows, processing speed does not grow with it. Hadoop was developed to close this gap.

- If we have huge data, the processing speed has to keep up with it. Hadoop is the best solution for this big data problem.

- We must have proper storage first. Once the data is stored properly in the file system, we can process the huge data.

- Hadoop is built to store huge data and to process it in less time. A Big Data Hadoop online training certification is also provided.

History of Hadoop Training:

- Take Google as an example. As a web search engine, it has to store a huge amount of data. As the web grew from the 1990s onward, search engines faced a very serious problem: how to store that huge data and how to process it.

- In 2003 Google published its answer to the storage problem: GFS (Google File System), a technique for storing the data.

- In 2004 Google presented another technique, MR (MapReduce), as the best technique for processing the data. The problem was that Google only described these techniques in white papers. It shared the idea but did not release an implementation.

- Yahoo, another major web search engine after Google, also stored large amounts of data and had the same problem: how to store the data, where to store it, and how to process it.

- To solve it, Yahoo introduced HDFS in 2006-2007 and MR in 2007-2008. Yahoo did not build HDFS and MR on techniques of its own.

- Instead, Yahoo used Google's published descriptions of GFS and MR and arrived at HDFS and MR. Online sessions are provided for Hadoop training with 24/7 services.

- HDFS and MR are the core concepts in Hadoop. The inventor of Hadoop is Doug Cutting.

Hadoop Training Architecture:

- Hadoop has several core components, and HDFS, the distributed file system, is the main one. For example, if we have a number of computers, we connect them over a network to form a cluster.

- That is the simple picture. In a typical Hadoop cluster, the networked computers are arranged in racks: one column of computers in the group is one rack. The rack is very important because some Hadoop concepts are associated with it.

- A rack is a kind of box into which a number of computers are fixed. Each rack has its own power supply and a dedicated network switch.

- If there is a problem with the rack's power supply, all the computers within the rack can drop off the network. The switches of all the racks are connected to a core switch so that everything is on one network, and we call this the Hadoop cluster.

- HDFS is designed with a master/slave architecture: there is one master, and all the other machines are slaves. The Hadoop master is called the name node. The slaves are called data nodes.

- The name node manages and stores all the names: the names of the directories and the names of the files. The data nodes store and manage the data of the files.

- HDFS is a file system, so we can create directories and files in it. Assume that we are creating a large file in HDFS. Three parties are involved: the Hadoop client, the Hadoop name node, and the Hadoop data nodes.

- The Hadoop client sends a request to the name node saying that it wants to create a file, and supplies the target directory name and the file name. On receiving the request, the name node performs several checks: the directory already exists, the file does not already exist, and the client has the right permission to create the file.

- The name node can make these checks because it maintains an image of the entire HDFS namespace in memory, the in-memory FS image. If all the checks pass, the name node creates an entry for the new file and returns success to the client.

- The file has now been created, but it is empty because we have not written any data to it yet. The client therefore creates an FSDataOutputStream and starts writing data to the stream.

- FSDataOutputStream is the Hadoop streamer class. It does a lot of work internally, and it buffers the data locally until a reasonable amount has accumulated, say 128 MB.

- We call this 128 MB unit an HDFS data block. Once there is one block of data, the streamer reaches out to the name node asking for a block allocation. It is like asking the name node where to store the block.

- The name node does not store the data itself, but it knows the free disk space at each and every data node. With this information, the name node can assign a data node to store the block.

- The name node performs the allocation and sends the data node's name back to the streamer. The streamer now knows where to send the data block and starts sending the block to that data node.

- If the file is larger than one block, the streamer reaches out to the name node again for a new block allocation. The name node assigns some other data node, so the next block goes to a different data node.

- Once the file has been written completely, the name node commits all the changes. Hadoop admin and Hadoop developer are also important roles covered in the Hadoop training.
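The write path described above can be sketched in a few lines of plain Python. This is only an illustrative toy, not the Hadoop API: `ToyNameNode`, `write_file`, and the "pick the data node with the most free space" policy are assumptions made for this example, and a real cluster also replicates blocks and takes racks into account.

```python
from dataclasses import dataclass, field

BLOCK_SIZE_MB = 128  # block size quoted in the text


@dataclass
class ToyNameNode:
    """Hypothetical in-memory stand-in for a name node (not the Hadoop API)."""
    directories: set = field(default_factory=lambda: {"/"})
    files: dict = field(default_factory=dict)    # path -> [(block index, data node)]
    free_mb: dict = field(default_factory=dict)  # data node -> free disk space in MB
    writers: set = field(default_factory=set)    # users allowed to create files

    def create(self, user, directory, name):
        # The three checks from the text, made against the in-memory namespace.
        if directory not in self.directories:
            raise FileNotFoundError(f"directory {directory} does not exist")
        path = f"{directory.rstrip('/')}/{name}"
        if path in self.files:
            raise FileExistsError(f"{path} already exists")
        if user not in self.writers:
            raise PermissionError(f"{user} may not create files")
        self.files[path] = []  # empty entry: the file exists but holds no data yet
        return path

    def allocate_block(self, path, size_mb):
        # Assumed policy: choose the data node with the most free space.
        node = max(self.free_mb, key=self.free_mb.get)
        self.free_mb[node] -= size_mb
        self.files[path].append((len(self.files[path]), node))
        return node


def write_file(name_node, user, directory, name, size_mb):
    """Client side: create the entry, then request one allocation per block."""
    path = name_node.create(user, directory, name)
    remaining = size_mb
    while remaining > 0:
        chunk = min(BLOCK_SIZE_MB, remaining)
        name_node.allocate_block(path, chunk)
        remaining -= chunk
    return name_node.files[path]
```

With three hypothetical data nodes, for example `ToyNameNode(directories={"/", "/data"}, free_mb={"dn1": 1000, "dn2": 900, "dn3": 800}, writers={"alice"})`, writing a 300 MB file takes three block allocations (128 MB, 128 MB, and 44 MB), and a second attempt to create the same file is rejected by the name node's checks.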

Big data:

- Big data refers to a volume of data so huge that it cannot be stored and processed using the traditional approach within the required time frame. The term often refers to data measured in gigabytes, terabytes, petabytes, or anything larger.

- Size alone does not define big data completely; even a small amount of data can be referred to as big data. Big data brings challenges such as capturing the data, handling data sources, and data storage. Hadoop was introduced to overcome these problems.

- We keep producing huge amounts of data, for example through e-commerce and everything else connected to the internet, so big data is not going away.

- Big data is not only about generating huge amounts of data. The data is not useful if we are not able to extract something from it.

- So we need to extract the value from the data. Big data is not about the size of the data; it is about the value within the data.

- One use of big data is customizing a website in real time based on user behaviour: when somebody visits your website, the website is aware of it.

Hadoop Training Ecosystem:

- Several components were created after the original Hadoop. They make the ecosystem a much more scalable and robust platform for any big data solution.

- Data is stored in Hadoop's storage layer, distributed across the machines of a distributed system. All the data brought into Hadoop is stored as files in HDFS (Hadoop Distributed File System).

- MapReduce (MR) is mainly about processing the data and getting valuable results; MR is the actual processing engine. Hadoop is not the creator of the data: data is created in some other system and then stored in Hadoop.

- To get data from other systems we need a mechanism, and Sqoop and Flume provide it. Sqoop brings data into Hadoop from relational databases such as MySQL and SQL Server, and it also works in the other direction, moving data out of Hadoop into a relational system.

- Sqoop has import and export utilities that help with this. Data destined for Hadoop may not be relational or structured; to bring unstructured data into Hadoop we have a mechanism called Flume.

- When you visit a website, all your clicks and actions on the site are recorded in log files. This record is called a clickstream.

- Apache Flume is very helpful for getting those logs from the log files into Hadoop, that is, into HDFS.

- Flume is very useful in Hadoop for bringing in unstructured data, and there are many other tools as well, such as Kafka and Spark.

- Hive is important to know because it makes Hadoop much easier to use. MR jobs are written in Java, whereas Hive is considered a high-level language: it uses SQL-like queries and runs on top of MapReduce.

- Pig was created by Yahoo. Its main purpose is to provide a high-level API in which jobs can be written with simple operations such as filter and sort; it helps to run MR jobs.

- When we write Pig scripts, they produce MR jobs.
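To make the MapReduce idea behind Hive and Pig more concrete, here is a minimal single-machine sketch in plain Python. It does not use Hadoop at all; the function names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are made up for this illustration, and a real MR job would run the same three stages spread across the data nodes of a cluster.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all values that share the same key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: collapse the values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over the input lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())
```

Calling `word_count(["big data", "Big value"])` counts "big" twice and "data" and "value" once each. A Hive query or a Pig script expresses this kind of grouping and aggregation at a higher level and leaves the generation of the MR stages to the tool.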

Hadoop Security:

Security is somewhat inconsistent across Hadoop distributions, because the built-in security features and the available options are not compatible between versions, including the newest ones. The training also explains Hadoop security concepts clearly.

Hadoop Testing:

Hadoop testing is necessary for finding errors in Hadoop applications, so developers who want to become testers need to learn it. Business functions depend on the output of big data programs such as MapReduce and Hive jobs. If those programs have problems, the output is affected, and this in turn affects the business application. Our trainers also cover Hadoop testing.

Conclusion of Hadoop Training:

IdesTrainings provides the best Hadoop online training by expert trainers. Hadoop is important because it stores huge amounts of data and also processes that data. Our trainers explain each and every concept of Hadoop. Taking Hadoop training has many benefits. The average package for a Hadoop developer is 66-67 lakh per annum.