Introduction to Apache Spark Training:

Apache spark was introduced by the Apache Software and became one of the large data distributed processing frameworks around the world. The Spark is designed mainly to cover the wide range of workloads such as batch applications, iterative algorithms, interactive queries & streaming. Mainly, the spark is developed to cover-up a large amount of data analytics including a group of applications, interactional algorithms, and also queries streaming. All government sectors, private sectors such as Facebook. Google, Apple and Microsoft’s are using Apache Sparks. IdesTrainings provides the Best Apache Spark Training by real-time experts. The main motto of Apache Spark Training at IdesTrainings is to give practical knowledge in developing real-time data analysis and big data analytics for data scientists and data analysts. Register Today for Apache Spark Training at IdesTrainings.

Prerequisites to learn Apache Spark Training:

Before going to get started with Apache Spark Training, you must have knowledge of Java, Python, SQL, and Scala. Knowledge on Scala is most important to learn Apache Spark. Apache Spark API’s offers in three languages they are Java, Python, and Scala. But actually the basic knowledge on Java is enough to learn Apache Spark. Knowledge on Hadoop also helps you to learn Apache Spark quickly. SQL type of queries also will be handled by Apache Spark. So that SQL developers also can learn Spark. Apache Spark is the most active project of Apache. Knowledge of understanding of the framework is more important to work on Spark

Apache Spark Training Course Details:

- Course Name: Apache Spark Training

- Mode of Training: We provide Online Training and Corporate Training for Apache Spark Online Course

- Duration of Course: 30 Hrs

- Do We Provide Materials? Yes, If you register with IdesTrainings, the Apache Spark Training Materials will be provided.

- Course Fee: After register with IdesTrainings, our coordinator will contact you.

- Trainer Experience: 15 years+

- Timings: According to one’s feasibility

- Batch Type: Regular, weekends and fast track

- Sessions will be conducted through WEBEX, GoToMeeting OR SKYPE.

- Basic Requirements: Good Internet Speed, Headset

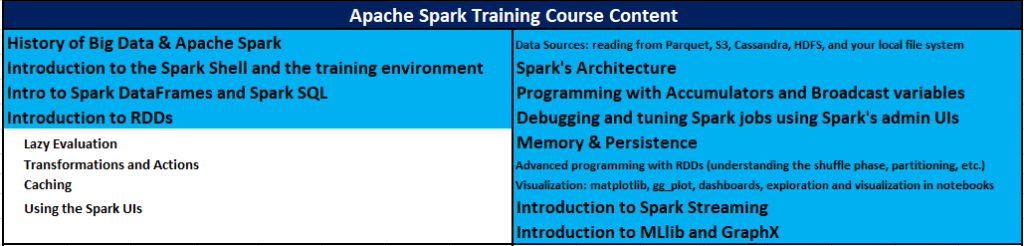

Apache Spark Online Training Course Content:

Overview of Apache Spark Training:

Apache Spark is one of the key large information which is appropriated the handling the systems on the planet, Spark which can be created by the assortment of the ways that gives the local ties to the java, scala, python and R programming dialects and it additionally underpins the SQL, spilling information, AI and the chart preparing, Apache Spark is an open source bunch figuring structure for ongoing information preparing. The primary component of Apache Spark is its in-memory group figuring that speeds up an application. Sparkle gives an interface to programming whole bunches with understood information parallelism and adaptation to non-critical failure. It is intended to cover a wide scope of outstanding burdens, for example, clump applications, iterative calculations, intuitive questions, and gushing.

What is Apache Spark?

Today, most of the companies facing problems to run their business operations innovative and collaborative. With the huge amount of shapeless data, all businesses want to enlarge their speed in data processing. Apache Spark technology is the best solutions for all big data issues. Apache Spark is also known as “Cluster Computing Engine”.

Apache Spark plays an important role when you are thinking about big data and large-scale data analytics unlike other data processing systems like Hadoop. Apache Spark is really much faster in terms of completions as well as how it utilizes resources such as memory to perform a lot of iterative computations.

Before introduced Spark, all were using Map Reduce for processing framework. At first, Spark was in process as one of the research projects in 2009. The main purpose of this project is to create a cluster management framework that supports various computing systems based on clusters. But later Spark released in the market, it has grown up and forwarded to the Apache Software Foundation in the year 2013. Now, most of the businesses are built-in with Apache Spark for empowering their large-scale data applications across the globe. The Apache Spark aims at increasing speed, simple to use, interactive with real-time data analytics.

Who should take up this Apache spark online training course?

- Software Engineers looking to upgrade Big Data skills

- Data Engineers and ETL Developers

- Data Scientists and Analytics Professionals

- Graduates who are looking to make a career in Big Data

What are the advantages of Apache Spark?

- Usability:Ability to support multiple languages makes it dynamic. It allows you to quickly write an application in Java, Scala, Python, and R.

- Memory Computing: We can quickly access the stored data which was kept in servers’ RAM. In memory, analytics accelerates machine learning algorithms iterative as it saves data to study and writes round trip from/to disk.

- Compatibility with Hadoop:Compatible with both versions of Hadoop and its ecosystem. It can run independently too.

- Advanced Analytics:Apache Spark helps Map reduce. But SQL queries, streaming data, Machine learning algorithms, and graphical data are also supported by Apache Spark.

- Real-Time Stream Processing: Spark streaming can control actual stream processing along with the incorporation of other frameworks. So, that sparks streaming have the capability is easy.

- Lazy Evaluation:One of the outstanding features of Apache Spark is miniaturization. It waits for instructions before providing a final result which saves time significant time.

- Active, Progressive: A wide set of developers are built from 100 companies. So that mails in Apache Spark always active and also the JIRA for problem tracking is available in Apache Spark. This is the most active part of the Apache repository.

What are the Features of Apache Spark?

Apache Spark is a tool that running the spark applications. Spark is 100 times quicker than Big Data Hadoop and 10 times quicker than accessing data from disk. Fortunately, Apache Spark is a boon and introduced by Apache Software Foundation. The lightning speed of the Apache Spark is very high in-memory data processing engine.

There are many features of Apache Spark. They are

- When it comes to large data processing speed we’ll always look to process our massive data as fast as possible. Spark clusters help to operate 10x faster in memory and 100x faster while running on Drive.

- Apache Sparks enlarge the speed of data processing by reducing the read and write to disc. Apache Spark helps to store that data into memory.

- Sparklets you write applications faster in Java, Scala, Python, and SQL. So that developers can write programs in their familiar programming languages. Then after able to run without trouble.

- Spark SQL helps to find out SQL Queries and streaming data.

- Spark SQL is also helped in solving complex analytics such as machine learning and graph algorithms. In addition, users can combine all these tasks smoothly in a workflow.

- Spark can handle real-time streaming with their abilities such as easy, fault tolerant and integrated.

- Once the data stores in the memory the Map-reduce processes the data internally. However, Spark can also control data in real time using Spark Streaming applications.

- Sparks light weight provides powerful API’s. So that it helps you to develop Spark Streaming applications

- Once the data stores in the memory the Map-reduce processes the data internally. However, Spark can also control data in real time using Spark Streaming applications.

- Sparks light weight provides powerful API’s. So that it helps you to develop Spark Streaming applications

- Unlike other streaming solutions, Spark streaming will retrieve lost work and provide correctly from the box without additional code or configuration.

- Reboot the same code for batch and broadcast processing, joining streaming data to historical data.

- Spark can run independently. The great feature of Spark can run YARN cluster manager of Hadoop 2 and read accessible Hadoop data. This is a great feature.

- Spark is acceptable for migrations of Hadoop applications in this case the application is really sparked.

This is less information about Apache Spark Features. If you want to know more please attend the demo for Apache Spark Training at IdesTrainings. In this Apache Spark Online Training, you can learn from basic level to advanced concepts and master the concepts of Apache Spark. Don’t miss this opportunity. Join Today!

What is the difference between Apache Spark and Hadoop?

There are different big data frameworks are available in the market. But only the hard thing is to select the right one. Apache Spark and Hadoop both are open source data processing projects initiated by Apache Software Foundation. Recently Apache Spark is more popular than Hadoop, Why because; Apache Spark is developed to improve the computational speed of data analytics. While the Hadoop is developed as an open source, Scalable framework written in Java, as a result, the speed of the processing differs significantly. But when compared to Hadoop MapReduce, Apache Spark provides better computational speed for all big data issues.

Now we go through the differences between Apache Spark and Hadoop.

Speed: The computational speed of the Apache Spark is 100 times faster than Hadoop MapReduce in memory and 10 times faster than Hadoop MapReduce on disk because there is no need to write and read the data in spark, its automatically stores in memory.

A difficulty with writing programs: Hadoop is very difficult with programs. In Hadoop developers need to write the programs, so that each and every operation will take more time to work. But in spark, there is no need to write any coding because it has many high-level operators.

Simple to Manage: Machine learning algorithms and streaming algorithms are available on the same cluster which helps Apache Spark to manage everything on cluster directly. But in Hadoop MapReduce, we should depend on different engines.

“So that we can run Apache Spark Independently as well as we can run it on top of Hadoop”

Why should we learn Apache Spark?

With increasing more number of businesses day by day, businesses that create large data at the fastest possible speed, but analyzing the meaningful business insights for leverage requires an hour to analyze data. Hadoop and Spark are big data processing alternatives. Spark became one of the big data processing environments as it provides streaming capabilities making spark as a preferred choice of platform to analyze the data with speed. Later the more number of businesses started to use Apache Spark.

There are reasons why developers showing interest in learning spark and also why should we learn Apache Spark?

Apache Spark has become one of the fastest growing big data communities across the world. Financial growth is also higher in Apache Spark when compared to other programmers.

There are three reasons why we should learn Apache Spark. They are

- To have Increased Access to Big Data you should learn Apache Spark: Apache Spark offers different types of possibilities for large data exploration and makes it easier for organizations to solve different types of problems with large data. Nowadays Apache Spark is the newest technology why because most of the data engineers and data scientists wish to work with Apache Spark. Most of the Data Scientists showing interest to work with Apache Spark because the data efficient storage in the memory can help to speed up the machine learning functions rather than Hadoop MapReduce.

- To make use of existing large data investments you should learn Apache Spark: After Hadoop’s initiative, many companies invested their technology in computing clusters. However, because Spark has been used by the many companies on existing Hadoop groups, the Apache Spark does not have any limits to investing in new computing clusters. Spark’s overwhelming similarity with Hadoop, the number of spark developers increased because they could not reintroduce computing clusters as companies could better integrate with Hadoop. This is one of the added advantages of learning Apache Spark.

- To make big money you should learn Apache Spark: According to several recent salaries, surveys found that data engineers who have experience with Apache Spark and Storm earn the highest average salaries. The people who want to settle their career in big data and wants to earn high salaries must learn Apache Spark.

What are the career opportunities with Apache Spark Training?

As we know that Apache Spark importance increasing day by day. There are plenty of career opportunities in Apache Spark. Let me explain about Spark opportunities clearly. Nowadays software developers are increasing leverage with spark framework in different languages. They are Java, Python, Scala, and SQL. Apache Spark permits developers to develop the new apps very fast and also helps them to run in their favourite languages. Some large companies hiring Apache Spark Developers such as Amazon, Microsoft, IBM, and Yahoo. The Apache Spark developers can also work in banking, Media, Software, Healthcare, and IT industries.

We also provide the Apache Kafka Training by real-time industry experts. If you are looking out for complete structured training in Apache Kafka, you should take a look at IdesTrainings Apache Kafka Training which helps you to master in Apache Kafka.

To start your career with Kafka, take a look at Apache Kafka Online Training!

Conclusion of Apache Spark Training:

As I discussed that Apache Spark is the most advanced and popular product of Apache Software Foundation deals with many machine learning programs. So, we can easily work on a large amount of data whether it’s structured or unstructured. Apache Spark is a cluster computing platform designed to be fast, speed to support any type of computations.

IdesTrainings provides Best Apache Spark Online Training with industry most experienced professionals. Because of having more career opportunities in Apache Spark it’s time to learn Apache Spark Training. Join today!